Fine-tuning Meta's LLaMA 2 on Lambda GPU Cloud

This blog post explains how to fine-tune LLaMA 2 models on Lambda GPU Cloud using a single NVIDIA A10 GPU priced at $0.60/hr.
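Before launching an instance, a quick sizing check can help you decide which LLaMA 2 variant to target. The sketch below is a back-of-the-envelope estimate, not the post's method. It assumes the A10's 24 GB of GPU memory, uses the $0.60/hr rate quoted above, and counts only the memory needed to hold the model weights. Gradients, optimizer state, activations, and framework overhead are ignored, so real fine-tuning needs noticeably more memory than these figures suggest. The helper names `run_cost_usd` and `weight_memory_gb` are hypothetical.

```python
# Back-of-the-envelope sizing for fine-tuning on a single A10 GPU.
# Assumptions (not from the post): the A10 has 24 GB of memory, and the
# memory estimate covers model weights only (no gradients, optimizer
# state, activations, or framework overhead).

A10_HOURLY_RATE_USD = 0.60  # on-demand price quoted in the post
A10_MEMORY_GB = 24          # NVIDIA A10 memory capacity


def run_cost_usd(hours: float, rate: float = A10_HOURLY_RATE_USD) -> float:
    """Cost of keeping one A10 instance running for `hours` (hypothetical helper)."""
    return round(hours * rate, 2)


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    print(f"5-hour run: ${run_cost_usd(5):.2f}")
    # LLaMA 2 is released in 7B, 13B, and 70B sizes; the two smaller ones are shown here.
    for size_label, num_params in [("7B", 7e9), ("13B", 13e9)]:
        for dtype_label, bytes_per_param in [("fp16", 2), ("8-bit", 1), ("4-bit", 0.5)]:
            gb = weight_memory_gb(num_params, bytes_per_param)
            verdict = "fits" if gb < A10_MEMORY_GB else "does not fit"
            print(f"{size_label} weights in {dtype_label}: {gb:.1f} GB ({verdict} in {A10_MEMORY_GB} GB)")
```

Under these assumptions, the fp16 weights of the 7B model (about 14 GB) fit on one A10 while the 13B model (about 26 GB) does not. This is why lower-precision weights or parameter-efficient methods are commonly used on a single 24 GB card.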

For fine-tuning a LLaMA model, which cloud GPU provider to use? runpod or lambda labs or …? : r/LocalLLaMA

Mike Mattacola en LinkedIn: Train a Foundation Model on Lambda's Cloud

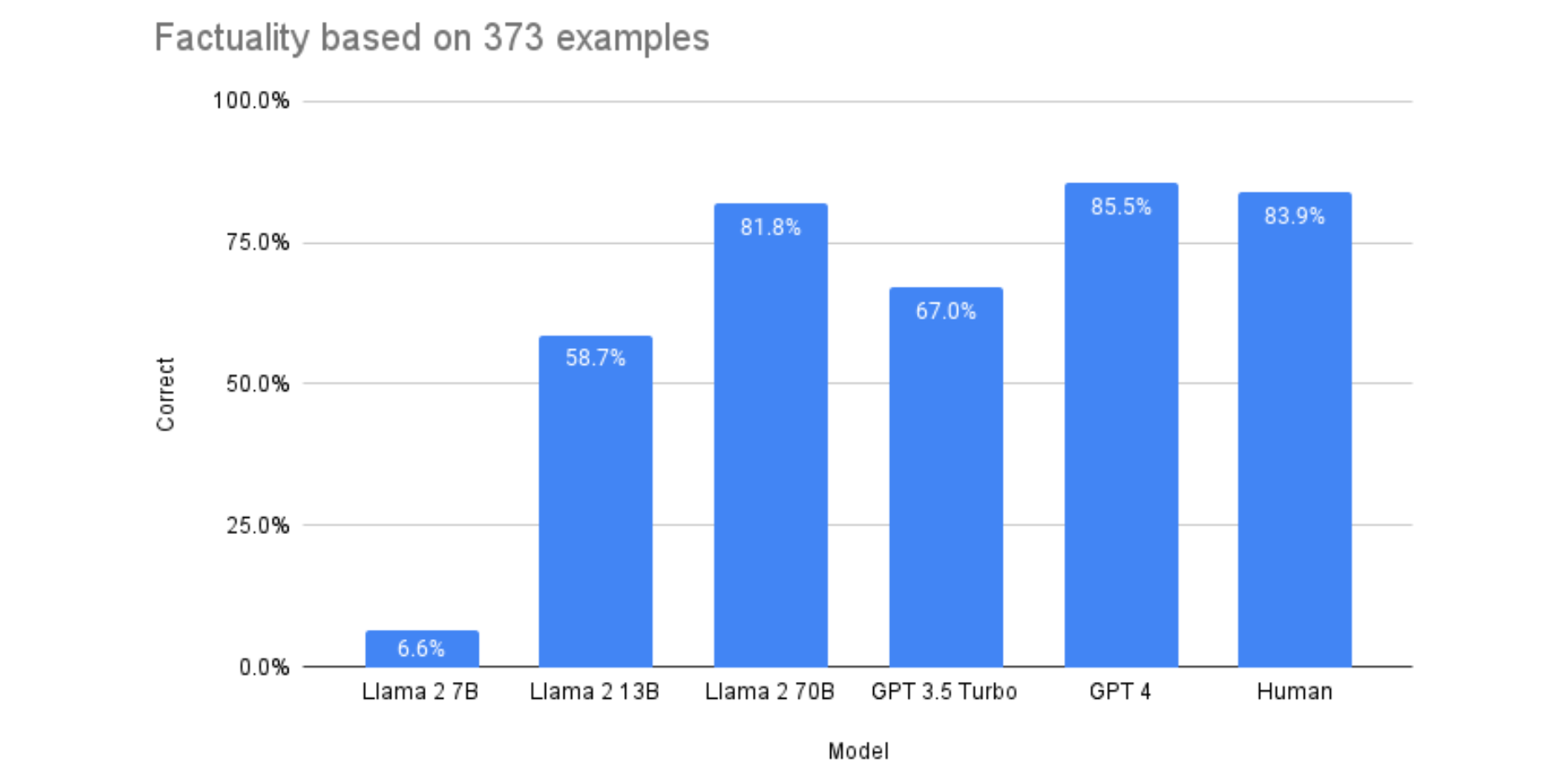

Llama 2 vs. GPT-4: Nearly As Accurate and 30X Cheaper

Mitesh Agrawal on LinkedIn: GitHub - huggingface/community-events: Place where folks can contribute to…

How to Install Llama 2 on Your Server with Pre-configured AWS Package in a Single

Fine-Tuning LLaMA 2 with SageMaker JumpStart

Mitesh Agrawal on LinkedIn: Training YoloV5 face detector on Lambda Cloud

Mike Mattacola posted on LinkedIn

Fine-tune Llama-2 with SageMaker JumpStart, by Michael Ludvig

How to Seamlessly Integrate Llama2 Models in Python Applications Using AWS Bedrock: A Comprehensive Guide, by Woyera

Fine-tune Llama 2 for text generation on SageMaker JumpStart

Fine-Tuning LLaMA 2 Model: Harnessing Single-GPU Efficiency with QLoRA, by AI TutorMaster

Fine-Tuning LLaMA 2 Model: Harnessing Single-GPU Efficiency with QLoRA, by AI TutorMaster

The Lambda Deep Learning Blog